Pipeline II

The GLAST *pipeline* is a software mechanism for organizing and executing massively parallel computing projects. Internally, the pipeline consists of a server application, web applications, Unix commands, and Oracle tables. Externally, the pipeline offers a general framework within which to organize and execute the desired data processing.

Organizational Concepts

The main organizational concepts of the pipeline are:

- Task (and subtask) - A top level definition of work to be performed by the pipeline. A task may consist of one or more processes, and zero or more nested subtasks. Tasks are defined by an XML file.

- Stream (and substream) - A stream represents a single request to run the processes within a task. Every stream has an associated stream number, which must be unique within its task. The stream number is always set at create stream time, either explicitly by the user or implicitly.

- Process - A single step within a task or subtask. Each process must be either a script or a job.

- Script - A process which results in a script being run inside the pipeline server. These small scripts are typically used to perform simple calculations, set variables, create subtasks, or make entries in the data catalog. Scripts can call functions provided by the pipeline, as well as additional functions for adding entries to the data catalog.

- Job - A process which results in a batch job being run.

- Variables - Pipeline variables can be defined in a pipeline XML file, either at the task level, or at the level of individual processes. They can also be defined at create stream time. Processes inherit variables from:

- The task which contains them.

- Any parent task of the task which contains them.

- Any variables defined at "create stream" time.
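The relationships among tasks, subtasks, streams, processes, and variables can be sketched as a small Python data model. This is a hypothetical illustration, not the pipeline's internal representation: the names `Task`, `Process`, `create_stream`, and `resolve_variables` are invented here. The variable precedence it uses (process, then create-stream variables, then the containing task, then parent tasks outward) is also an assumption, since the list above names the inheritance sources but not which one wins when names collide.

```python
from collections import ChainMap
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Process:
    """A single step within a task; must be either a script or a job."""
    name: str
    kind: str  # "script" (runs inside the pipeline server) or "job" (batch job)
    variables: Dict[str, str] = field(default_factory=dict)

    def __post_init__(self) -> None:
        if self.kind not in ("script", "job"):
            raise ValueError(f"process {self.name!r} must be a script or a job")


@dataclass
class Task:
    """Work definition: one or more processes plus zero or more nested subtasks."""
    name: str
    variables: Dict[str, str] = field(default_factory=dict)
    processes: List[Process] = field(default_factory=list)
    subtasks: List["Task"] = field(default_factory=list)
    # Back-reference to the enclosing task; excluded from repr/compare to avoid cycles.
    parent: Optional["Task"] = field(default=None, repr=False, compare=False)
    # Stream number -> variables supplied at create-stream time.
    streams: Dict[int, Dict[str, str]] = field(default_factory=dict)

    def add_subtask(self, sub: "Task") -> "Task":
        sub.parent = self
        self.subtasks.append(sub)
        return sub

    def create_stream(self, number: int, env: Optional[Dict[str, str]] = None) -> Dict[str, str]:
        # Stream numbers must be unique within each task.
        if number in self.streams:
            raise ValueError(f"stream {number} already exists in task {self.name!r}")
        self.streams[number] = dict(env or {})
        return self.streams[number]


def resolve_variables(process: Process, task: Task, stream_env: Dict[str, str]) -> ChainMap:
    """Return the variables visible to `process` running in `task`.

    ASSUMPTION: the most specific scope wins; this ordering is illustrative only.
    """
    scopes = [process.variables, stream_env, task.variables]
    ancestor = task.parent
    while ancestor is not None:  # walk outward through parent tasks
        scopes.append(ancestor.variables)
        ancestor = ancestor.parent
    return ChainMap(*scopes)
```

Under these assumptions, a job in a subtask sees a variable defined on its parent task unless the subtask, the stream, or the process itself redefines it.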

Task Services

Task services offered by the pipeline include:

- DataCatalog

- Rules-based scheduler

- User variables for intra-task communication

- Express Python scripting (e.g., dynamic stream creation and DB activities)

- Interface to batch system

- Signal handling

- Support for off-site processing

Operator Services

Operator services offered by the pipeline include:

- Web interface

- Task/Stream management

- Task/Stream Status

- Task/Stream plotted statistics

- Unix command-line interface

- Processing history database

Using the Pipeline

Basic steps for using the pipeline:

- Prepare, in advance, all data processing code in a form that can run unattended (non-interactively) and autonomously.

This typically consists of one or more commands, with optional parameters or other environment setup.

- Design a Task Configuration file: an XML file that defines the processing steps, execution rules, and datasets to be cataloged.

- Submit the Task Configuration file to the pipeline server via the Web interface.

Note: Administrative privileges are required.

- Create one or more top-level streams.

This action instantiates new stream objects in the pipeline database and commences the execution of that stream. Once a stream has been created, its progress can be tracked using the web interface (Task Status and other pages). New streams may be created using either a web page or Unix commands.

- Rinse and repeat as necessary.

- Streams requiring manual intervention may be restarted (rolled back) using the web interface.

Web Interface

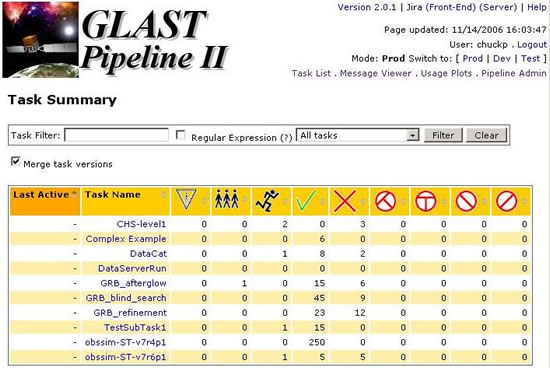

From the GLAST Ground Software Portal, click Pipeline II. The Task Summary page is displayed.

From the Task Summary, you can:

- Monitor a task by simply entering the task name as a filter.

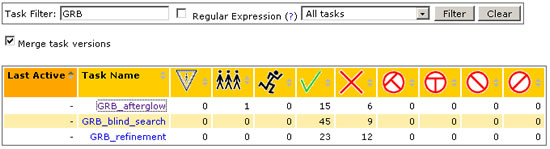

- Enter a task filter for a group of tasks (e.g., GRB) and, from a pulldown menu, select the category of tasks to which it applies (e.g., All Tasks, Tasks with Runs, Tasks without Runs, Tasks with Active Runs, and Active in Last 30 Days).

Note: When you click the Clear button, the default list (All Tasks) is displayed.

- Drill down to successive layers of detail by clicking on the links within respective columns.

If a task has failed, you can drill down from the task name, checking the status column until you find the stream that failed; then check the log file for that stream (accessible from the links located in the right-most column).

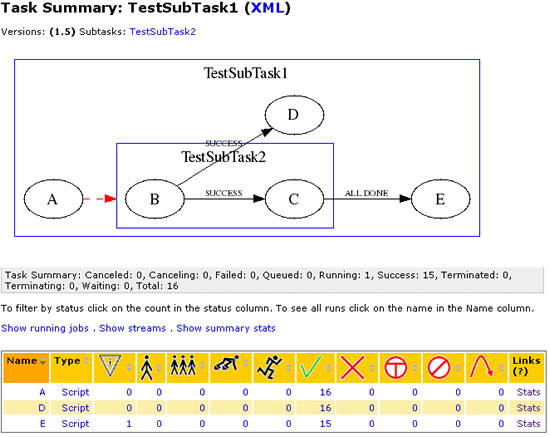

If a task is running, clicking the task name (e.g., TestSubTask1) displays a Task Dependency Flow Chart.

- Access the Message Viewer and Usage Plots, and switch between Pipeline II modes (Production, Development, and Test).

Note: If you have a SLAC Unix or Windows user ID and password, you can also log in to Pipeline Admin.

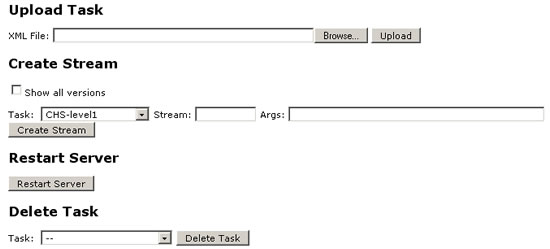

Pipeline Admin

From the Pipeline Admin GUI, you can upload a pipeline task, create a stream, restart the server, and delete a task.

Note: To test a new version of a script, upload and test the new script on the test pipeline server.

XML Schema

Note: When editing an XML file for the pipeline, you are encouraged to use an editor that can validate XML files against an XML schema; this will save you a lot of time. Emacs users may be interested in this guide to using XML with Emacs.

- The full pipeline II schema is available at:

http://glast-ground.slac.stanford.edu/Pipeline-II/schemas/2.0/pipeline.xsd

- To view the XML for any task listed in the Pipeline II Task List, first click on the Task Name you are interested in, then click on the XML link in the Task Summary Header on the task page; for example:

http://glast-ground.slac.stanford.edu/Pipeline-II/xml.jsp?task=22980

- The Maven-generated Pipeline II web site contains full documentation of the XML schema, generated automatically from the source.

Also see: Possible XML for pipeline II

Batch Job Environment Variables

Batch jobs always have the following environment variables set:

| Variable | Description |
|---|---|
| PIPELINE_PROCESSINSTANCE | Internal database ID of the process instance |
| PIPELINE_STREAM | Stream number |
| PIPELINE_STREAMPATH | Stream path; for a top-level task this is the same as the stream number, for subtasks it has the form i.j.k |
| PIPELINE_TASK | Task name |
| PIPELINE_PROCESS | Process name |
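A job script can collect these variables once at startup. The following is a minimal sketch assuming the job is written in Python; `PipelineContext` and `pipeline_context` are hypothetical names, and treating each stream-path component as an integer is an assumption based on stream numbers being numeric.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional, Tuple


@dataclass(frozen=True)
class PipelineContext:
    process_instance: str  # PIPELINE_PROCESSINSTANCE: internal database id
    stream: str            # PIPELINE_STREAM: stream number
    stream_path: str       # PIPELINE_STREAMPATH: "n" at top level, "i.j.k" in subtasks
    task: str              # PIPELINE_TASK: task name
    process: str           # PIPELINE_PROCESS: process name

    @property
    def path_parts(self) -> Tuple[int, ...]:
        # Split "i.j.k" into (i, j, k); a top-level path yields a 1-tuple.
        return tuple(int(part) for part in self.stream_path.split("."))

    @property
    def in_subtask(self) -> bool:
        return len(self.path_parts) > 1


def pipeline_context(env: Optional[Mapping[str, str]] = None) -> PipelineContext:
    """Read the pipeline-provided variables, defaulting to the real environment."""
    env = os.environ if env is None else env
    try:
        return PipelineContext(
            process_instance=env["PIPELINE_PROCESSINSTANCE"],
            stream=env["PIPELINE_STREAM"],
            stream_path=env["PIPELINE_STREAMPATH"],
            task=env["PIPELINE_TASK"],
            process=env["PIPELINE_PROCESS"],
        )
    except KeyError as missing:
        raise RuntimeError(
            f"{missing.args[0]} is not set; not running under the pipeline?"
        ) from None
```

Accepting an optional mapping keeps the function testable outside a batch job; inside a job, calling `pipeline_context()` with no argument reads `os.environ`.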

Command Line Tools

For details on using the Pipeline II client, consult its usage message, which currently reads:

    Syntax:

       pipeline <command> <args>

    where command is one of:

       createStream <task> <stream> <env>

          where <task>   is the name of the task to create (including optional
                         version number)
                <stream> is the stream number to create.
                <env>    are environment variables to pass in, of the form
                         var=value{,var=value...}

Example:

    ~glast/pipeline-II/pipeline createStream CHS-level1 2 "downlinkID=060630001,
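When many streams must be created, the `createStream` invocation can be scripted. This is a sketch under stated assumptions: the client path comes from the example above, the helper names `format_env`, `create_stream_command`, and `create_stream` are hypothetical, and two behaviors are assumed rather than documented (that `<env>` may be omitted when empty, and that the `var=value` syntax has no escaping, so commas in values are rejected).

```python
import os
import subprocess
from typing import List, Mapping, Optional

# Client location taken from the example above; adjust for your site.
PIPELINE_CLIENT = "~glast/pipeline-II/pipeline"


def format_env(variables: Mapping[str, str]) -> str:
    """Encode variables as var=value{,var=value...}."""
    pairs = []
    for name, value in variables.items():
        # ASSUMPTION: no escaping exists, so separators must not appear in the data.
        if not name or "=" in name or "," in name:
            raise ValueError(f"invalid variable name: {name!r}")
        if "," in str(value):
            raise ValueError(f"value for {name!r} must not contain a comma")
        pairs.append(f"{name}={value}")
    return ",".join(pairs)


def create_stream_command(task: str, stream: int,
                          variables: Optional[Mapping[str, str]] = None,
                          client: str = PIPELINE_CLIENT) -> List[str]:
    """Build argv for: pipeline createStream <task> <stream> <env>."""
    argv = [client, "createStream", task, str(stream)]
    if variables:  # ASSUMPTION: <env> may be omitted when there is nothing to pass
        argv.append(format_env(variables))
    return argv


def create_stream(task: str, stream: int,
                  variables: Optional[Mapping[str, str]] = None,
                  client: str = PIPELINE_CLIENT) -> subprocess.CompletedProcess:
    argv = create_stream_command(task, stream, variables, client)
    argv[0] = os.path.expanduser(argv[0])  # "~glast" -> the glast account's home
    # A list argv bypasses the shell, so the env string needs no extra quoting.
    return subprocess.run(argv, check=True)
```

For example, `create_stream("CHS-level1", 2, {"downlinkID": "060630001"})` would issue a command of the same shape as the example above; that example's full variable list is truncated on this page, so only its first variable is reproduced.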

Pipeline Objects

"pipeline" Object - Provides an entry point for communicating with the pipeline server in script processes; functionality available at the time of this writing is summarized below:

pipeline API

"datacatalog" Object - Provides an entry point for communicating with the datacatalog service in script processes; functionality available at the time of this writing is summarized below:

datacatalog API

registerDataset(String dataType, String logicalPath, String filePath)


Registers a new Dataset entry with the Data Catalog.

dataType Character string specifying the type of data contained in the file. Examples include: MC, DIGI, RECON, MERIT.

This is an enumerated field, and must be pre-registered in the database. A Pipeline-II developer can add additional values upon request.

logicalPath Character string representing the location of the dataset in the virtual directory structure of the Data Catalog.

This parameter contains three fields: "folder", (optional) "group", and dataset "name".

Encoding is "/path/to/folder/group:name"; if the optional group specification is omitted, the encoding is "/path/to/folder/name".

Example: /ServiceChallenge/Background/1Week/MC:000001 represents a dataset named "000001" stored in a group named "MC" within the folder "/ServiceChallenge/Background/1Week/".

Example: /ServiceChallenge/Background/1Week/000001 represents a dataset named "000001" stored directly within the folder "/ServiceChallenge/Background/1Week/".

filePath Character string representing the physical location of the file.

This parameter contains two fields: the "file path on disk" and the (optional) "site" of the disk cluster.

Encoding is "/path/to/file@SITE". The default site is "SLAC".
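The `logicalPath` and `filePath` encodings above can be built and checked with a few string helpers. This is a minimal sketch: the helper names are hypothetical, and the rule that "/" and ":" may not appear inside a group or name is an assumption inferred from their role as separators. The helpers target Python 3; whether they run unchanged inside a pipeline script process depends on the interpreter the server embeds, which this page does not specify.

```python
from typing import NamedTuple, Optional, Tuple

DEFAULT_SITE = "SLAC"  # default site when filePath has no "@SITE" suffix


class LogicalPath(NamedTuple):
    folder: str
    group: Optional[str]
    name: str


def make_logical_path(folder: str, name: str, group: Optional[str] = None) -> str:
    """Encode as /path/to/folder/group:name, or /path/to/folder/name without a group."""
    for label, part in (("name", name), ("group", group)):
        # ASSUMPTION: "/" and ":" are separators and cannot appear inside these fields.
        if part is not None and (not part or "/" in part or ":" in part):
            raise ValueError(f"invalid {label}: {part!r}")
    leaf = f"{group}:{name}" if group is not None else name
    return f"{folder.rstrip('/')}/{leaf}"


def parse_logical_path(logical_path: str) -> LogicalPath:
    """Split a logical path into (folder, group or None, name)."""
    if not logical_path.startswith("/"):
        raise ValueError(f"logical path must be absolute: {logical_path!r}")
    folder, _, leaf = logical_path.rpartition("/")
    group, sep, name = leaf.partition(":")
    if not sep:  # no group specification
        group, name = None, leaf
    return LogicalPath(folder or "/", group, name)


def make_file_path(path: str, site: Optional[str] = None) -> str:
    """Encode as /path/to/file@SITE; omit the suffix to use the default site."""
    return path if site is None else f"{path}@{site}"


def parse_file_path(file_path: str) -> Tuple[str, str]:
    """Return (file path on disk, site), applying the default site if absent."""
    path, sep, site = file_path.rpartition("@")
    if not sep:
        return file_path, DEFAULT_SITE
    return path, site or DEFAULT_SITE
```

In a script process, the resulting strings would be passed to the documented call, e.g. `datacatalog.registerDataset("MERIT", make_logical_path(folder, name, group), make_file_path(path))`, where `folder`, `name`, `group`, and `path` are placeholders for your own values.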

Also See:

- Pipeline II User's Guide (Confluence)

| Owned by: | Tony Johnson and Tom Glanzman |

| Last updated by: Chuck Patterson 11/15/2006 |